Installing RVTools?

These days, with so many types of cloud and so many directions to go in, admins, architects and engineers need an easy way to pull information from VMware. RVTools is a free utility that does exactly that. As of March 2019, a revised tool with new features has been released as version 3.11.6. The last revision was over a year ago, so it is worth taking the time to look at the new version. Here are the features I found interesting:

- vInfo tab page new column: Creation date virtual machine

- vInfo tab page new columns: Primary IP Address and vmx Config Checksum

- vInfo tab page new columns: log directory, snapshot and suspend directory

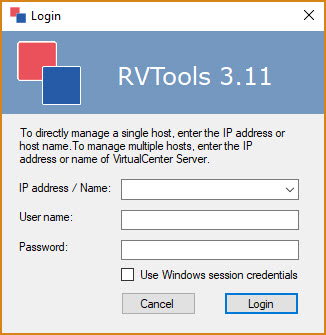

Download it and run RVTools.msi. (Depending on your requirements, the install should be a series of left clicks and done.)

When the install is done, you should be able to go to Start Menu –> All Programs –> RVTools.

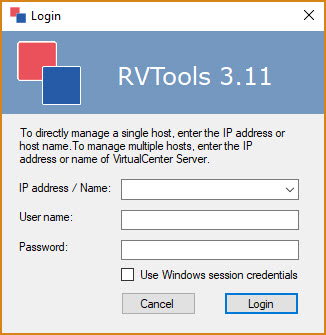

The IP address being asked for should be that of the server running vCenter. This gives you everything you need from a single location. If you are not using vCenter, you can run the tool against ESXi hosts directly, but you will need to connect to each one individually.

How do you use the tool?

Log in and authenticate. As soon as you log in, you can see all the information about your virtual environment on the home screen.

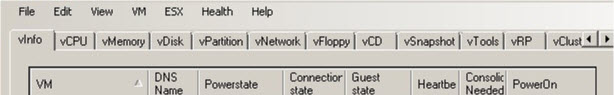

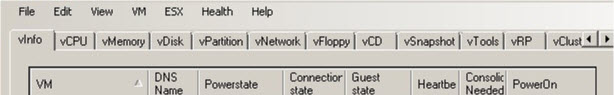

Once you are in the tool, go to File –> Export to Excel to get all the information in a digestible format. Most people working with a customer environment will only need the vInfo tab, which is the first tab.

vInfo

Gives details about the virtual machines and their health status, such as Name, Power State, Config Status, Number of CPUs, Memory, Storage and the HW Version, which is important at times when using 3rd party tools.
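Once the vInfo data is exported, a few lines of scripting can turn it into a quick summary. Here is a minimal sketch in Python, assuming the vInfo sheet has been saved as CSV. The column names (`VM`, `Powerstate`, `CPUs`, `Memory`, `HW version`), the memory unit (MB), the sample rows and the `summarize_vinfo` helper are all assumptions made for illustration, so check them against your own export.

```python
import csv
import io
from collections import Counter

# Hypothetical sample standing in for a vInfo sheet saved as CSV.
# Column names and values are assumptions; adjust them to match your export.
SAMPLE_VINFO = """VM,Powerstate,CPUs,Memory,HW version
web01,poweredOn,2,4096,13
db01,poweredOn,8,32768,11
old-app,poweredOff,1,2048,8
"""


def summarize_vinfo(csv_text, min_hw_version=11):
    """Summarize vInfo-style rows: power states, CPU/memory totals, old HW versions."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    return {
        # How many VMs are in each power state
        "power_states": dict(Counter(r["Powerstate"] for r in rows)),
        # Total vCPUs and memory (assumed to be in MB) across all VMs
        "total_cpus": sum(int(r["CPUs"]) for r in rows),
        "total_memory_mb": sum(int(r["Memory"]) for r in rows),
        # VMs whose hardware version is below the chosen threshold
        "old_hw": [r["VM"] for r in rows if int(r["HW version"]) < min_hw_version],
    }


if __name__ == "__main__":
    for key, value in summarize_vinfo(SAMPLE_VINFO).items():
        print(f"{key}: {value}")
```

The `min_hw_version` threshold is arbitrary here. Set it to whatever hardware version your 3rd party tool requires.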

Other Tabs

The other tabs hold more information, but those specifics are mostly of interest for administration. They can also help when you are trying to diagnose the environment from the export.

- vCPU – Talks about all things processor from sockets to entitlements.

- vMemory – Memory utilization and overhead can help in right sizing.

- vDisk – Talks about capacity and controllers and modes.

- vPartition – Shows which partitions are active and other disk information.

- vNetwork – Adapters, IP address assignments and connected values.

- vFloppy – Interesting one if you actually use it.

- vCD – Like the floppy but geared towards the CDROM.

- vSnapshot – Lists the snapshots that exist on each VM. The Tools version and upgrade flag, which can be very important when a migration needs that granularity, are found on the vTools tab.

- vRP – Resource Pools for VMs, but only if you are interested in the reservations needed.

- vCluster – Displays information about each cluster, specifically the name and status of the cluster, along with VMs per core on the cluster. Troubleshooting assistance for sure.

- vHBA – Again all in the name, with specifics on Name, Driver, Device Type, Bus, WW Name and PCI address.

- vNIC – Physical network details such as host name, datacenter name, cluster name, network name, driver, device type, switch, speed and duplex.

- vSwitch – Every virtual switch is located here which can help if you are troubleshooting them or moving applications.

- vPort – All in the name: what each port does, its port group and VLAN ID.

- vLicense – All in the name: information on your licenses, including the name of the licensed product, key, labels, expiration date and which licenses are currently in use.

- There are more tabs, but these are the ones I am familiar with and use.
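To make the "VMs per core" figure from the vCluster tab concrete, here is a rough sketch of the arithmetic in Python. It assumes the figure is simply the number of VMs divided by the total physical cores in the cluster. That is my reading of the column, not a documented formula, and the `vms_per_core` helper and the host sizes are hypothetical.

```python
def vms_per_core(vm_count, hosts):
    """Return VMs per physical core for a cluster.

    hosts is a list of (sockets, cores_per_socket) tuples, one per ESXi host.
    Assumes the ratio is plain VM count divided by total physical cores.
    """
    total_cores = sum(sockets * cores for sockets, cores in hosts)
    if total_cores == 0:
        raise ValueError("cluster has no cores to divide by")
    return vm_count / total_cores


if __name__ == "__main__":
    # Hypothetical cluster: two hosts, each with 2 sockets of 8 cores, running 48 VMs
    print(vms_per_core(48, [(2, 8), (2, 8)]))
```

A ratio that climbs over time is a hint to look at the vCPU and vMemory tabs for right sizing before adding more VMs.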